Last week DITA introduced us to the burgeoning area of alternative metrics and, more specifically, to the platform ‘Altmetric’, which offers academics an analytic tool to measure the “social impact” of the work they produce. The idea behind these metrics is to supplement the traditional assessment method of counting citations with insight into how much attention each article is receiving online.

During our lab session, we began to experiment with the Altmetric Explorer and to familiarise ourselves with the distinctive ‘Altmetric donut’, which shows the altmetric ‘score’ and its breakdown by the sources where the article has been referenced, whether in tweets, blogs, news sources or any of the other sources in Altmetric’s vast database. Many of my DITA colleagues have written really good introductions to altmetrics, which are definitely worth a look for a good grounding in the subject.

Carrying out searches and looking through the data on the site, it’s definitely interesting to see how an article reaches audiences. As an academic, it’s easy to see how this could prove useful in tracking and widening the dissemination of your work. Whether that work is well received, or even read, is where the altmetric idea falls short. The creators openly recognise this themselves, as it’s not a failing which is readily solvable. On the site they are quick to highlight that,

“Altmetric measures attention, not quality. People pay attention to papers for all sorts of reasons, not all of them positive.”

The platform does make a considerable effort to mitigate this by allocating more points in each Altmetric score to sources which are of higher ‘quality’ themselves, thereby hinting at the quality of the work itself. It’s not a fool-proof plan, but it is hard to see what would be.
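To make the weighting idea a little more concrete, here is a minimal Python sketch. The weights, the source names and the `weighted_attention` function are all my own illustrative assumptions and not Altmetric's actual values or algorithm. The sketch only shows how a handful of mentions from a heavily weighted source can outscore a larger number from a lightly weighted one.

```python
# Hypothetical per-source weights: illustrative only, NOT Altmetric's real values.
ASSUMED_WEIGHTS = {"news": 8.0, "blog": 5.0, "tweet": 1.0}


def weighted_attention(mentions, weights=ASSUMED_WEIGHTS):
    """Sum mention counts, scaling each source by its assumed weight."""
    # Sources without a listed weight count as 1 point per mention.
    return sum(count * weights.get(source, 1.0) for source, count in mentions.items())


two_news = weighted_attention({"news": 2})
ten_tweets = weighted_attention({"tweet": 10})
print(two_news, ten_tweets)
```

Under these assumed weights, two news stories score higher than ten tweets, which is the kind of effect the weighting is meant to produce.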

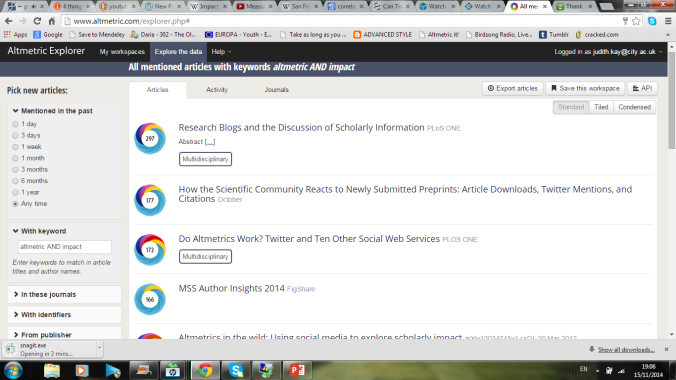

On beginning to write this post, I took a second look at Altmetric and decided to run a search on alternative metrics themselves, to see whether much has been written about them in the academic field and, more particularly, about how they measure ‘impact’.

Using the search toolbar I entered the keywords “altmetrics AND impact”, which I thought might return a broad range of articles, including any commenting on the effectiveness of altmetrics in measuring impact.

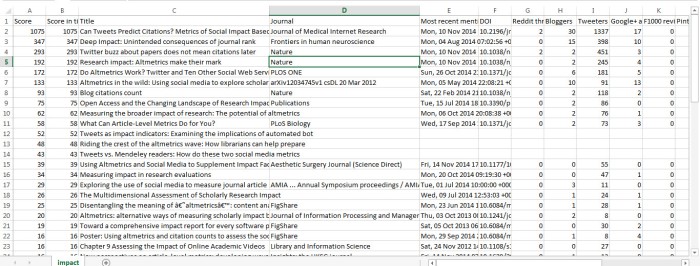

The result was a collection of sixty articles, which I exported as a CSV file for Excel to better view the details of each entry. Glancing through the journals column, the majority of sources are scientific journals, or at least journals relating primarily to health and the sciences, with just a few from information science or humanities publications. This seems in tune with the critique that altmetrics have been adopted more by the science community than by any other. It’s also quick to see that the majority of the attention paid to the articles came as tweets or as items viewed via Mendeley.
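For anyone who would rather not scroll through a spreadsheet, the same glance can be sketched in a few lines of Python. This is only a rough sketch. The column names (`Journal`, `Twitter`, `Blogs`, `Mendeley`) and the three sample rows are made-up placeholders, not the real export, so they would need adjusting to match whatever headers the actual CSV uses.

```python
import csv
import io
from collections import Counter

# Stand-in for the exported file; column names and rows are made up for illustration.
SAMPLE_EXPORT = """Title,Journal,Twitter,Blogs,Mendeley
Article one,Journal A,12,1,30
Article two,Journal A,3,0,8
Article three,Journal B,0,2,5
"""


def summarise(csv_file, source_columns):
    """Count articles per journal and total mentions per source column."""
    journals = Counter()
    totals = Counter()
    for row in csv.DictReader(csv_file):
        journals[row["Journal"]] += 1
        for column in source_columns:
            # Treat blank cells as zero mentions.
            totals[column] += int(row[column] or 0)
    return journals, totals


journals, totals = summarise(io.StringIO(SAMPLE_EXPORT), ["Twitter", "Blogs", "Mendeley"])
print(journals.most_common())  # journals ordered by number of articles
print(totals.most_common())    # sources ordered by total mentions
```

With a real export, the `io.StringIO(...)` argument could be swapped for an opened file, for example `open("export.csv", newline="")`, where the filename is again just a placeholder.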

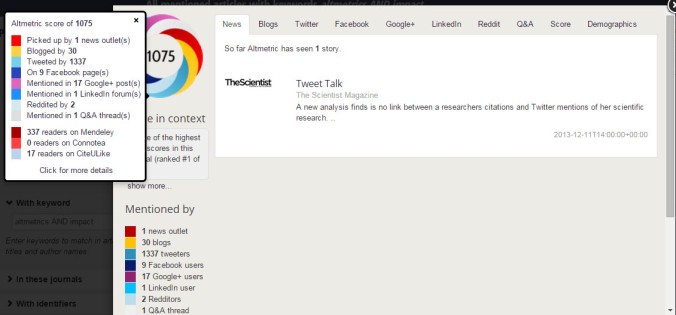

The first ‘hit’ on the list of data is, fortunately, the most relevant to my interests, and looking at how its Altmetric score has been calculated is an interesting way to see how others have engaged with it. The number of tweets vastly outnumbers any of the other mentions it gains, with a total of 1337. Looking at the number of related blog posts and Mendeley readers, my first thought is how many of these are coming from the same person. One individual may have tweeted the article, read it on Mendeley and posted a blog referencing it, which instantly reduces the scope for measuring how many individuals have engaged with the material (although of course these activities may have led to others reading the article). Plus, as mentioned above, it also fails to show whether these references were positive or negative. All of this attention may have come from readers who considered the content to be poorly thought out or inaccurate.
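The double-counting worry can be shown with a tiny Python sketch. It assumes each mention could be tied to an identifiable author, which the real data may well not allow, and the records and names below are invented purely for illustration.

```python
# Hypothetical mention records as (source, author) pairs; invented for illustration.
mentions = [
    ("tweet", "person_a"),
    ("blog", "person_a"),
    ("mendeley", "person_a"),
    ("tweet", "person_b"),
]


def count_unique_people(records):
    """Count distinct authors, however many sources each one appears in."""
    return len({author for _source, author in records})


total_mentions = len(mentions)
unique_people = count_unique_people(mentions)
print(total_mentions, unique_people)
```

In this made-up case, four mentions come from only two people, so the raw count overstates the number of individuals reached.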

I decided to take a closer look at these instances of attention to see whether the numbers could be a good indication that people are really engaging with the article, and whether the mentions were positive, negative or neutral. Many of the references are just neutral mentions that the article is there. It’s surprisingly difficult to find material which actually comments on and critiques the work. The best source in this respect appears to be blogging. The select few of the 30 highlighted were more likely to comment on the article and give a perspective on it. In this sense, I think seeing the number of citations made to the articles in other academic outputs alongside these ‘alternative’ sources would be useful, because at least it shows the article has been read and considered. It is all the more important then, I think, to remember that altmetrics are a complementary form of measuring impact and should ideally be viewed in combination with other metric tools. Considering the lapse in time between publication and citation of an article, however, being able to see at least whether the word is out on your work is incredibly beneficial, though one can only wait and hope that others have picked up the signposts and taken the time to engage.

Interestingly, the conclusion of this article is that tweets (or “tweetations”) can correlate positively with citations, though it does stress what I’ve covered here: that “social impact” is very different from knowledge uptake in academia. Even so, the authors remain optimistic for the future of altmetrics.

So on that note, here is a link to the article itself for anyone interested and hopefully an extra blog post mention may take it a small step towards further notice in the wider academic communities…

P.S.

As a brief aside, while writing this entry I stumbled upon a blog post about librarians and altmetrics, which may be useful to some of you: 4 Things Every Librarian Should Do With Altmetrics